Coding & scoring

Coding and scoring were the two processes used to evaluate students’ responses to the assessment.

Coding is the process of assigning a pre-determined value (a code) to each student response to a question. Codes are assigned for a correct response to a question, for different incorrect responses, and for invalid responses, such as leaving the question blank.

Scoring is the process of assigning a quantitative value (a score) to each code. For example, a score of one is assigned to codes relating to a correct response and a score of zero is assigned to codes relating to incorrect responses. For each student, the scores are added to give a total score for the assessment.

All three cognitive areas – numeracy, reading, and writing – were coded to enable deep understanding of why students were answering questions incorrectly. Contextual data were not coded as these questions do not have ‘correct’ answers.

Sample coding scheme

Figure RNF#3.1 is an example of a coding scheme. It uses questions similar to those in the PILNA numeracy assessment.

The “descriptor” identifies the concept or skill that is being assessed using a particular question. The “sample response” and “code” columns specify the code that is assigned to a particular response.

The sample responses and codes are developed through an iterative process – first the question developers identify what they anticipate student responses will be.

Then content experts evaluate the codes to identify common misconceptions that provide insight into student thinking. These can be thought of as “incorrect answers that a student might give if their logic was not quite right”.

Finally, field trial results are used to validate and refine the coding scheme.

In the example provided, for item 1, the descriptor is that students are expected to round a given number to the nearest ten or hundred.

The three answers students are most likely to give are 300, 290 and 200, and these are assigned codes 1, 2 and 3 respectively. All other responses are assigned code 0; this includes responses that do not meet the requirements for answering the question, such as selecting more than one choice in a multiple-choice question. A non-response or a blank is assigned code 9.
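The scheme for item 1 can be pictured as a simple lookup. The sketch below is only an illustration of how a transcribed response maps to a code; in PILNA the codes were assigned by trained coding panels, and the names `ITEM_1_SCHEME` and `assign_code` are hypothetical, not part of any PILNA tool.

```python
from typing import Optional

# Codes for item 1 ("Round 288 to the nearest hundred"), taken from the sample scheme.
ITEM_1_SCHEME = {"300": 1, "290": 2, "200": 3}
CODE_OTHER = 0  # any response not listed in the scheme
CODE_BLANK = 9  # non-response or blank


def assign_code(response: Optional[str], scheme: dict = ITEM_1_SCHEME) -> int:
    """Return the code for a transcribed response (hypothetical helper)."""
    # A missing or whitespace-only response counts as blank.
    if response is None or not response.strip():
        return CODE_BLANK
    # Listed responses get their own code; everything else falls back to 0.
    return scheme.get(response.strip(), CODE_OTHER)


if __name__ == "__main__":
    for response in ["300", "290", "200", "288", ""]:
        print(repr(response), "->", assign_code(response))
```

Note that the function records which response was given without judging it correct or incorrect; that judgement belongs to the scoring step.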

| Question | Responses | Codes |

Item #: 1 Strand: Numeracy Descriptor: Rounding numbers to the nearest tens or hundreds. Round 288 to the nearest hundred. | 300 | 1 |

| 290 | 2 | |

| 200 | 3 | |

| All others | 0 | |

| Blank | 9 | |

Item #: 2 Strand: Operations Descriptor: Solve word problemsIn one year, a year 5 student collected 572 shells. She gave 58 shells to her friend. How many shells does she have left? | 514 | 1 |

| 572-58 | 2 | |

| 630 | 3 | |

| All others | 0 | |

| Blank | 9 | |

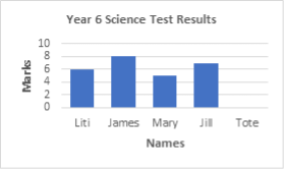

Item #: 3 Strand: Data Descriptor: Identifies and compares everyday information represented in simple graphs

| Bar in correct position and height at 6 | 1 |

| Indicating a mark at correct position 6 | 2 | |

| Drawing straight line to correct position 6 | 3 | |

| Draw 6 bars or lines, but not exactly matching to gradations | 4 | |

| All others | 0 | |

| Blank | 9 |

It is important to note that the coders are asked to observe and record what the students have given as responses. They are not asked to mark each answer as correct or incorrect.

That process, scoring, occurs after values are assigned to particular codes to give full, partial or no credit for specific responses.
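As a minimal sketch of this step, the example below maps codes to scores with a per-item score key and sums them into a total. The score values are assumptions made for illustration: the source does not state which codes earn partial credit, so the 0.5 for item 2, code 2 is hypothetical, as are the names `SCORE_KEYS`, `score_response` and `total_score`.

```python
# Hypothetical score keys: code -> score for each item.
# Codes not listed (other incorrect responses, code 0, blank code 9) score zero.
SCORE_KEYS = {
    "item_1": {1: 1.0},
    "item_2": {1: 1.0, 2: 0.5},  # 0.5 is an assumed partial credit, not a PILNA value
    "item_3": {1: 1.0},
}


def score_response(item: str, code: int) -> float:
    """Look up the score for one coded response; unlisted codes earn no credit."""
    return SCORE_KEYS[item].get(code, 0.0)


def total_score(codes_by_item: dict) -> float:
    """Add the scores for all of a student's coded responses."""
    return sum(score_response(item, code) for item, code in codes_by_item.items())


# One student's codes: item 1 correct, item 2 coded 2, item 3 left blank.
student_codes = {"item_1": 1, "item_2": 2, "item_3": 9}
print(total_score(student_codes))
```

Keeping the score key separate from the codes means the credit given to a code can be revised without recoding any student responses.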

Security protocols

Data were coded and validated in-country under strict security protocols.

Each national coordinator identified a numeracy coding panel leader and a literacy coding panel leader, and appointed the members of these two panels in their country. Panel members were selected based on their experience with assessment scoring and their content knowledge in literacy or numeracy. The national coordinators also identified data entry officers.

The coding and scoring panel members and the data entry officers were trained through virtual facilitation techniques.

Each country’s coding activities were also supported virtually, and the data entered online could be monitored.

Data entry officers entered students’ response codes online using Survey Solutions software or on a pre-prepared Excel spreadsheet (if internet was not available). Questionnaire responses were also entered using Survey Solutions software.